- 1. Environment preparation

- 2. Install Docker CE

- 3. Install Etcd 3.3.17

- 4. Install Kubernetes

- 5. Deploy core Kubernetes addons

1. Environment preparation

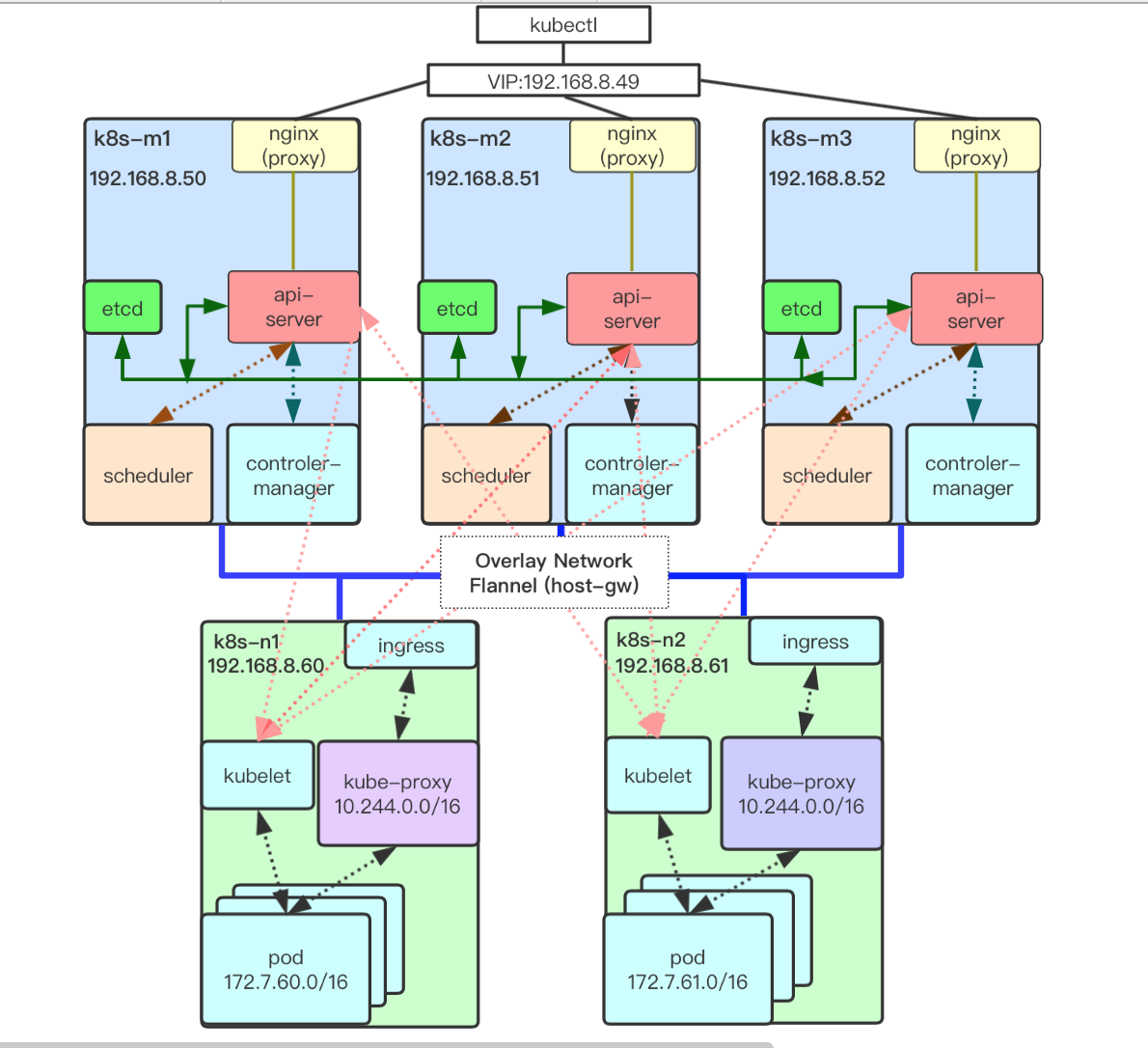

1.1. Environment overview

| IP | Role | Hostname | CPU | Memory | Service |
|---|---|---|---|---|---|
| 192.168.8.50 | master | k8s-m1 | 4 | 2G | Etcd, API-Server |
| 192.168.8.51 | master | k8s-m2 | 4 | 2G | Etcd, API-Server |
| 192.168.8.52 | master | k8s-m3 | 4 | 2G | Etcd, API-Server |
| 192.168.8.60 | node | k8s-n1 | 4 | 2G | |
| 192.168.8.61 | node | k8s-n2 | 4 | 2G | |

1.2. Software versions

| Name | Version |
|---|---|
| kubernetes | v1.15.1 |
| Etcd | v3.3.17 |
| Flannel | v0.11.0 |
| Docker | 19.03.8 |

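1.3. Network plan

The values below match the flags used later in this guide: kube-controller-manager and kube-proxy use --cluster-cidr 172.7.0.0/16, kube-apiserver uses --service-cluster-ip-range 10.244.0.0/16, and kubelet uses --cluster-dns 10.244.0.2.

| Network | Value |
|---|---|
| Pod network (cluster CIDR) | 172.7.0.0/16 |
| Service cluster IP CIDR | 10.244.0.0/16 |
| Service DNS IP | 10.244.0.2 |
| DNS domain | cluster.local |
| Kubernetes API VIP | 192.168.8.49 |
| Kubernetes Ingress VIP | 192.168.8.48 |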
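A quick way to sanity-check a network plan is to confirm that each fixed address falls inside the range it is supposed to belong to, for example that the DNS address passed to kubelet later in this guide (10.244.0.2) lies inside the 10.244.0.0/16 range given to kube-apiserver. The following is a minimal sketch in plain bash; the helper names `ip_to_int` and `in_cidr` are made up for this illustration and are not part of any tool used in this guide.

```bash
#!/bin/bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed (exit status 0) when address $1 lies inside CIDR $2.
in_cidr() {
    local ip="$1" net="${2%/*}" bits="${2#*/}"
    local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    (( ( $(ip_to_int "${ip}") & mask ) == ( $(ip_to_int "${net}") & mask ) ))
}

# Check a few addresses against the service range.
for ip in 10.244.0.1 10.244.0.2 10.96.0.10; do
    if in_cidr "${ip}" 10.244.0.0/16; then
        echo "${ip} is inside 10.244.0.0/16"
    else
        echo "${ip} is outside 10.244.0.0/16"
    fi
done
```

An address that is reported as outside the service range cannot be used as a ClusterIP for a Service in that cluster.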

1.4. Initialize the system environment

Run the following initialization steps on every host.

- Edit /etc/hosts

cat > /etc/hosts << EOF
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.8.50 k8s-m1
192.168.8.51 k8s-m2
192.168.8.52 k8s-m3
192.168.8.60 k8s-n1
192.168.8.61 k8s-n2
EOF

- Configure passwordless SSH login

# Press Enter at every prompt to generate the key pair under .ssh
$ ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:ffKsQoXKzZS8hrTnnB1Xu9GnCnoNm2BXpTAYwFDiJpA root@k8s-m1
The key's randomart image is:
+---[RSA 2048]----+
...
+----[SHA256]-----+

Copy the public key to every host:

ssh-copy-id k8s-m1
ssh-copy-id k8s-m2
ssh-copy-id k8s-m3
ssh-copy-id k8s-n1
ssh-copy-id k8s-n2

- Set the hostname (use each host's own name)

hostnamectl --static set-hostname k8s-m1

- Disable the firewall, SELinux and swap

sed -ri '/^[^#]*SELINUX=/s#=.+$#=disabled#' /etc/selinux/config
sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab
systemctl disable --now firewalld NetworkManager
setenforce 0
swapoff -a && sysctl -w vm.swappiness=0

- Install the required packages

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.bak
curl http://mirrors.aliyun.com/repo/Centos-7.repo -o /etc/yum.repos.d/CentOS-Base.repo
yum clean all && yum makecache fast
yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git iptables-services
systemctl start iptables && systemctl enable iptables
iptables -F && service iptables save

- Configure system time and logging

# Create the directories used by rsyslogd and systemd-journald before writing the drop-in file
mkdir -p /var/log/journal /etc/systemd/journald.conf.d
cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF
[Journal]
# Persist logs to disk
Storage=persistent
# Compress archived logs
Compress=yes
SyncIntervalSec=5m
RateLimitInterval=30s
RateLimitBurst=1000
# Maximum disk usage: 10G
SystemMaxUse=10G
# Maximum size of a single log file: 200M
SystemMaxFileSize=200M
# Keep logs for 2 weeks
MaxRetentionSec=2week
# Do not forward logs to syslog
ForwardToSyslog=no
EOF
systemctl restart systemd-journald

# Set the time zone to Asia/Shanghai
timedatectl set-timezone Asia/Shanghai
# Keep the hardware clock in UTC
timedatectl set-local-rtc 0
# Restart the services that depend on the system time
systemctl restart rsyslog && systemctl restart crond
systemctl stop postfix && systemctl disable postfix

- Install cfssl (run this step on k8s-m1 only)

mkdir -p /opt/cert
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
mv cfssl_linux-amd64 /usr/local/bin/cfssl
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

- Tune kernel parameters and upgrade the kernel

cat <<EOF > /etc/sysctl.d/k8s.conf
# https://github.com/moby/moby/issues/31208
# ipvsadm -l --timeout
# Work around long-lived connection timeouts in ipvs mode: keep this below 900
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_intvl = 30
net.ipv4.tcp_keepalive_probes = 10
net.ipv6.conf.all.disable_ipv6 = 1
net.ipv6.conf.default.disable_ipv6 = 1
net.ipv6.conf.lo.disable_ipv6 = 1
net.ipv4.neigh.default.gc_stale_time = 120
net.ipv4.conf.all.rp_filter = 0
net.ipv4.conf.default.rp_filter = 0
net.ipv4.conf.default.arp_announce = 2
net.ipv4.conf.lo.arp_announce = 2
net.ipv4.conf.all.arp_announce = 2
net.ipv4.ip_forward = 1
net.ipv4.tcp_max_tw_buckets = 5000
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 1024
net.ipv4.tcp_synack_retries = 2
# Pass bridged traffic through iptables, ip6tables and arptables
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-arptables = 1
net.netfilter.nf_conntrack_max = 2310720
fs.inotify.max_user_watches=89100
fs.may_detach_mounts = 1
fs.file-max = 52706963
fs.nr_open = 52706963
vm.swappiness = 0
vm.overcommit_memory=1
vm.panic_on_oom=0
EOF
sysctl --system

# Upgrade the kernel
rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
yum --enablerepo=elrepo-kernel install -y kernel-lt
# Boot from the new kernel by default
grub2-set-default 0

- Reboot the system

reboot

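2. Install Docker CE

Install the Docker CE container engine on every machine.

To find the Docker versions validated for a given Kubernetes release, open the changelog of that release at https://github.com/kubernetes/kubernetes and search for "The list of validated docker versions remain".

Install Docker 18.09 with the official script: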

export VERSION=18.09

curl -fsSL "https://get.docker.com/" | bash -s -- --mirror Aliyun

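After installation, configure the Aliyun registry mirror and the bip (bridge IP) setting.

To keep the container addresses of each node distinct, set bip to 172.7.xxx.1/24, where xxx identifies the node (for example 172.7.50.1/24 on 192.168.8.50).

Each node then gets its own /24 subnet.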
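One way to derive the per-node value is to reuse the last octet of the node's IP address as the third octet of the bridge address. The following is a minimal sketch under that assumption; the function name `bip_for_ip` is hypothetical and the mapping is only a convention, not something Docker requires.

```bash
#!/bin/bash
# Derive a per-node Docker bridge address (bip) from the node's IP.
# Assumption: the last octet of the node IP becomes the third octet of 172.7.x.1/24.
bip_for_ip() {
    local ip="$1"
    local last_octet="${ip##*.}"   # strip everything up to the last dot
    echo "172.7.${last_octet}.1/24"
}

for ip in 192.168.8.50 192.168.8.51 192.168.8.60; do
    echo "${ip} -> $(bip_for_ip "${ip}")"
done
```

The result for each host is the value to put in the "bip" field of that host's /etc/docker/daemon.json.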

mkdir -p /etc/docker/

cat>/etc/docker/daemon.json<<EOF

{

"graph": "/data/docker",

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://fz5yth0r.mirror.aliyuncs.com"],

"storage-driver": "overlay2",

"live-restore": true,

"log-driver": "json-file",

"bip":"172.7.50.1/24",

"log-opts": {

"max-size": "100m",

"max-file": "3"

}

}

EOF

Enable Docker at boot and install command completion:

yum install -y epel-release bash-completion && cp /usr/share/bash-completion/completions/docker /etc/bash_completion.d/

systemctl enable --now docker

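3. Install Etcd 3.3.17

k8s-m1 (192.168.8.50) acts as the certificate authority host. All certificates are issued on k8s-m1 and then distributed to the servers that need them.

3.1. Configure the etcd certificates

3.1.1. Create the JSON files used to issue the etcd certificates

# Create the certificate working directory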

$ mkdir -p /opt/cert/

$ cd /opt/cert/

$ cat << EOF > ca-config.json

{

"signing": {

"default": {

"expiry": "175200h"

},

"profiles": {

"server": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth"

]

},

"client": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"client auth"

]

},

"peer": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

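Notes:

default: the default policy; certificates expire after 175200h (20 years)

server, client, peer: signing profiles that define what a certificate may be used for

signing: the certificate may be used to sign other certificates; the generated ca.pem has CA=TRUE

server auth: a client can use this CA to verify the certificate presented by a server

client auth: a server can use this CA to verify the certificate presented by a client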
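The expiry values are given in hours, which makes them easy to misread. A small sketch for converting them to years follows; the helper name `hours_to_years` is made up for this example and it assumes 365-day years.

```bash
#!/bin/bash
# Convert a cfssl-style expiry such as "175200h" to whole years (365-day years assumed).
hours_to_years() {
    local hours="${1%h}"   # drop a trailing "h" if present
    echo $(( hours / 24 / 365 ))
}

echo "175200h = $(hours_to_years 175200h) years"
echo "87600h  = $(hours_to_years 87600h) years"
```

With the values used in ca-config.json, 175200h corresponds to 20 years, while the commonly seen 87600h corresponds to 10 years.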

3.1.2. Create the etcd CA certificate request file

$ cat << EOF > ca-csr.json

{

"CN": "k8s",

"hosts":[

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "kubernetes",

"OU": "ops"

}

],

"ca": {

"expiry": "175200h"

}

}

EOF

3.1.3. Create the etcd peer certificate request file

Change the hosts field in etcd-peer-csr.json to the host addresses of your own environment.

cat << EOF | tee etcd-peer-csr.json

{

"CN": "k8s-etcd",

"hosts": [

"192.168.8.50",

"192.168.8.51",

"192.168.8.52"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "kubernetes",

"OU": "ops"

}

]

}

EOF

3.1.4. Generate the etcd CA certificate and private key

$ cfssl gencert -initca ca-csr.json | cfssljson -bare ca

$ ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem etcd-peer-csr.json

3.1.5. Generate the etcd peer certificate and private key

$ cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json|cfssljson -bare etcd-peer

$ ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem etcd-peer.csr etcd-peer-csr.json etcd-peer-key.pem etcd-peer.pem

3.1.6. Distribute the certificates and private key

Copy ca.pem, etcd-peer-key.pem and etcd-peer.pem to every etcd host:

for NODE in k8s-m1 k8s-m2 k8s-m3;

do

echo "--- $NODE ---";

ssh $NODE "mkdir -p /opt/etcd/certs";

scp -r ca.pem etcd-peer-key.pem etcd-peer.pem $NODE:/opt/etcd/certs;

done

3.2. Install and configure etcd

3.2.1. Download etcd

Download etcd from its GitHub releases page.

Run on every etcd host:

# Download
$ wget https://github.com/etcd-io/etcd/releases/download/v3.3.17/etcd-v3.3.17-linux-amd64.tar.gz
# Extract
$ tar zxf etcd-v3.3.17-linux-amd64.tar.gz
$ cd etcd-v3.3.17-linux-amd64
# Create the required directories
$ mkdir -p /opt/etcd/bin /opt/etcd/certs /data/etcd /data/logs/etcd-server
# Copy the binaries
$ cp etcd etcdctl /opt/etcd/bin/
# Add the etcd user
$ useradd -s /sbin/nologin -M etcd
# Install supervisor to manage etcd and the other services
$ yum -y install supervisor
$ systemctl start supervisord && systemctl enable supervisord

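3.2.2. Create the etcd startup script

Create /opt/etcd/etcd-server-startup.sh on every etcd host.

Note:

Apart from the --initial-cluster flag, change --name and every IP address to the values of the local host. Because supervisor runs the script as the etcd user, the script must be executable (chmod +x) and the etcd user must be able to read /opt/etcd/certs and write to /data/etcd and /data/logs/etcd-server (for example via chown -R etcd:etcd).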
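To make the per-host differences explicit, the host-specific flags can be rendered from the node name and IP. This is only an illustrative sketch: the function name `render_etcd_flags` is hypothetical, and it prints just the flags that differ between hosts rather than the complete startup script.

```bash
#!/bin/bash
# Print the etcd flags that differ from host to host.
# The --initial-cluster flag is identical everywhere and is therefore not rendered here.
render_etcd_flags() {
    local name="$1" ip="$2"
    cat <<EOF
--name ${name}
--listen-peer-urls https://${ip}:2380
--listen-client-urls https://${ip}:2379,http://127.0.0.1:2379
--initial-advertise-peer-urls https://${ip}:2380
--advertise-client-urls https://${ip}:2379,http://127.0.0.1:2379
EOF
}

# Show the values for each of the three etcd hosts in this guide.
while read -r name ip; do
    echo "### ${name}"
    render_etcd_flags "${name}" "${ip}"
done <<'HOSTS'
k8s-m1 192.168.8.50
k8s-m2 192.168.8.51
k8s-m3 192.168.8.52
HOSTS
```

Comparing the three outputs shows exactly which lines of the startup script have to change on k8s-m2 and k8s-m3.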

#!/bin/bash

/opt/etcd/bin/etcd \

--name k8s-m1 \

--data-dir /data/etcd/etcd-server \

--listen-peer-urls https://192.168.8.50:2380 \

--listen-client-urls https://192.168.8.50:2379,http://127.0.0.1:2379 \

--quota-backend-bytes 8000000000 \

--initial-advertise-peer-urls https://192.168.8.50:2380 \

--advertise-client-urls https://192.168.8.50:2379,http://127.0.0.1:2379 \

--initial-cluster k8s-m1=https://192.168.8.50:2380,k8s-m2=https://192.168.8.51:2380,k8s-m3=https://192.168.8.52:2380 \

--ca-file ./certs/ca.pem \

--cert-file ./certs/etcd-peer.pem \

--key-file ./certs/etcd-peer-key.pem \

--client-cert-auth \

--trusted-ca-file ./certs/ca.pem \

--peer-ca-file ./certs/ca.pem \

--peer-cert-file ./certs/etcd-peer.pem \

--peer-key-file ./certs/etcd-peer-key.pem \

--peer-client-cert-auth \

--peer-trusted-ca-file ./certs/ca.pem \

--log-output stdout

Create the supervisor program file:

$ cat /etc/supervisord.d/etcd-server.ini

[program:etcd-server]

command=/opt/etcd/etcd-server-startup.sh

numprocs=1

directory=/opt/etcd

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=etcd

redirect_stderr=true

stdout_logfile=/data/logs/etcd-server/etcd.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=4

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

Reload the supervisor configuration:

$ supervisorctl update

$ supervisorctl status

When all hosts are done, check the etcd cluster health:

$ /opt/etcd/bin/etcdctl cluster-health

member 43e910c7e955fb4 is healthy: got healthy result from http://127.0.0.1:2379

member 246fcb0107b3587f is healthy: got healthy result from http://127.0.0.1:2379

member f0fcaebd3ebb8817 is healthy: got healthy result from http://127.0.0.1:2379

cluster is healthy

$ /opt/etcd/bin/etcdctl member list

43e910c7e955fb4: name=k8s-m1 peerURLs=https://192.168.8.50:2380 clientURLs=http://127.0.0.1:2379,https://192.168.8.50:2379 isLeader=false

246fcb0107b3587f: name=k8s-m2 peerURLs=https://192.168.8.51:2380 clientURLs=http://127.0.0.1:2379,https://192.168.8.51:2379 isLeader=true

f0fcaebd3ebb8817: name=k8s-m3 peerURLs=https://192.168.8.52:2380 clientURLs=http://127.0.0.1:2379,https://192.168.8.52:2379 isLeader=false

4. Install Kubernetes

Download links:

https://dl.k8s.io/v1.15.1/kubernetes-server-linux-amd64.tar.gz

https://dl.k8s.io/v1.15.1/kubernetes-client-linux-amd64.tar.gz

Extract the archive and remove the files that are not needed:

tar xf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin

rm -f *.tar

rm -f *_tag

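Copy the extracted kubernetes directory to /opt on every k8s master node. Run the loop from the directory that contains kubernetes:

cd ../../..
for NODE in k8s-m1 k8s-m2 k8s-m3;
do
echo "--- $NODE ---"
scp -r kubernetes ${NODE}:/opt/
done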

4.1. Install API-Server

4.1.1. Configure the certificates

Run in the /opt/cert/ directory on the certificate host k8s-m1.

4.1.1.1. Issue the client certificate (api-server -> etcd cluster communication)

client-csr.json

{

"CN": "k8s-node",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "kubernetes",

"OU": "ops"

}

]

}

Generate the certificate:

cfssl gencert -ca ca.pem -ca-key ca-key.pem -config ca-config.json -profile client client-csr.json| cfssljson -bare client

$ ls client*

client.csr client-csr.json client-key.pem client.pem

4.1.1.2. Issue the server certificate

apiserver-csr.json

cat << EOF | tee apiserver-csr.json

{

"CN":"k8s-apiserver",

"hosts": [

"127.0.0.1",

"10.244.0.1",

"192.168.8.48",

"192.168.8.49",

"192.168.8.50",

"192.168.8.51",

"192.168.8.52",

"192.168.8.53",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "API Server"

}

]

}

EOF

Generate the certificate:

cfssl gencert -ca ca.pem -ca-key ca-key.pem -config ca-config.json -profile server apiserver-csr.json| cfssljson -bare apiserver

$ ls apiserver*

apiserver.csr apiserver-csr.json apiserver-key.pem apiserver.pem

4.1.2. Distribute the certificates

Run on every k8s master host. The certificates were created in /opt/cert on k8s-m1:

cd /opt/kubernetes/server/bin
mkdir cert conf
cd cert
scp k8s-m1:/opt/cert/apiserver.pem .
scp k8s-m1:/opt/cert/apiserver-key.pem .
scp k8s-m1:/opt/cert/ca.pem .
scp k8s-m1:/opt/cert/ca-key.pem .
scp k8s-m1:/opt/cert/client.pem .
scp k8s-m1:/opt/cert/client-key.pem .

Create the audit policy file /opt/kubernetes/server/bin/conf/audit.yaml:

apiVersion: audit.k8s.io/v1beta1 # This is required.
kind: Policy
# Don't generate audit events for all requests in RequestReceived stage.
omitStages:
  - "RequestReceived"
rules:
  # Log pod changes at RequestResponse level
  - level: RequestResponse
    resources:
    - group: ""
      # Resource "pods" doesn't match requests to any subresource of pods,
      # which is consistent with the RBAC policy.
      resources: ["pods"]
  # Log "pods/log", "pods/status" at Metadata level
  - level: Metadata
    resources:
    - group: ""
      resources: ["pods/log", "pods/status"]
  # Don't log requests to a configmap called "controller-leader"
  - level: None
    resources:
    - group: ""
      resources: ["configmaps"]
      resourceNames: ["controller-leader"]
  # Don't log watch requests by the "system:kube-proxy" on endpoints or services
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
    - group: "" # core API group
      resources: ["endpoints", "services"]
  # Don't log authenticated requests to certain non-resource URL paths.
  - level: None
    userGroups: ["system:authenticated"]
    nonResourceURLs:
    - "/api*" # Wildcard matching.
    - "/version"
  # Log the request body of configmap changes in kube-system.
  - level: Request
    resources:
    - group: "" # core API group
      resources: ["configmaps"]
    # This rule only applies to resources in the "kube-system" namespace.
    # The empty string "" can be used to select non-namespaced resources.
    namespaces: ["kube-system"]
  # Log configmap and secret changes in all other namespaces at the Metadata level.
  - level: Metadata
    resources:
    - group: "" # core API group
      resources: ["secrets", "configmaps"]
  # Log all other resources in core and extensions at the Request level.
  - level: Request
    resources:
    - group: "" # core API group
    - group: "extensions" # Version of group should NOT be included.
  # A catch-all rule to log all other requests at the Metadata level.
  - level: Metadata
    # Long-running requests like watches that fall under this rule will not
    # generate an audit event in RequestReceived.
    omitStages:
      - "RequestReceived"

4.1.3. Create the startup script

/opt/kubernetes/server/bin/kube-apiserver.sh

cat > /opt/kubernetes/server/bin/kube-apiserver.sh <<EOF

#!/bin/bash

./kube-apiserver \

--apiserver-count 3 \

--audit-log-path /data/logs/kubernetes/kube-apiserver/audit.log \

--audit-policy-file ./conf/audit.yaml \

--authorization-mode RBAC \

--client-ca-file ./cert/ca.pem \

--requestheader-client-ca-file ./cert/ca.pem \

--enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \

--etcd-cafile ./cert/ca.pem \

--etcd-certfile ./cert/client.pem \

--etcd-keyfile ./cert/client-key.pem \

--etcd-servers https://192.168.8.50:2379,https://192.168.8.51:2379,https://192.168.8.52:2379 \

--service-account-key-file ./cert/ca-key.pem \

--service-cluster-ip-range 10.244.0.0/16 \

--service-node-port-range 3000-29999 \

--target-ram-mb=1024 \

--kubelet-client-certificate ./cert/client.pem \

--kubelet-client-key ./cert/client-key.pem \

--log-dir /data/logs/kubernetes/kube-apiserver \

--tls-cert-file ./cert/apiserver.pem \

--tls-private-key-file ./cert/apiserver-key.pem \

--v 2

EOF

/etc/supervisord.d/api-server.ini

cat > /etc/supervisord.d/api-server.ini <<EOF

[program:api-server]

command=/opt/kubernetes/server/bin/kube-apiserver.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/api-server/apiserver.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=4

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

EOF

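Make the script executable, create the log directories, and start the service:

chmod +x /opt/kubernetes/server/bin/kube-apiserver.sh
mkdir -p /data/logs/api-server /data/logs/kubernetes/kube-apiserver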

supervisorctl update

supervisorctl status

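4.2. Install the reverse proxy on the master nodes

This section installs and configures the keepalived and nginx reverse-proxy services on the master nodes.

4.2.1. Install and configure nginx

nginx acts as a layer-4 reverse proxy: it listens on port 7443 and forwards to port 6443 on the api-server nodes.

Install nginx: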

yum -y install nginx-1.16.1

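Edit the configuration file. The heredoc delimiter is quoted so that the nginx variables ($remote_addr and so on) are written literally instead of being expanded by the shell:

cat >/etc/nginx/nginx.conf <<'EOF'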

user nginx;

worker_processes 2;

error_log /var/log/nginx/error.log warn;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

keepalive_timeout 65;

gzip on;

}

stream {

upstream api-server {

#hash $remote_addr consistent;

server 192.168.8.50:6443 weight=1 max_fails=3 fail_timeout=10s;

server 192.168.8.51:6443 weight=1 max_fails=3 fail_timeout=10s;

server 192.168.8.52:6443 weight=1 max_fails=3 fail_timeout=10s;

}

server {

listen 7443;

proxy_pass api-server;

proxy_timeout 600s;

proxy_connect_timeout 30s;

}

}

EOF

Configure supervisor:

mkdir -p /data/logs/nginx

cat > /etc/supervisord.d/nginx.ini <<EOF

[program:nginx]

command=/usr/sbin/nginx -g 'daemon off;'

autorestart=true

autostart=true

stderr_logfile=/data/logs/nginx/error.log

stdout_logfile=/data/logs/nginx/stdout.log

environment=ASPNETCORE_ENVIRONMENT=Production

user=root

stopsignal=INT

startsecs=10

startretries=5

stopasgroup=true

EOF

Start the service:

supervisorctl update

supervisorctl status

4.2.2. Install and configure keepalived

Configure keepalived with the VIP 192.168.8.49.

Install keepalived:

yum -y install keepalived

Configuration for the MASTER host:

$ cat > /etc/keepalived/keepalived.conf<<EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

# vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

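# Health-check script definition
vrrp_script chk_nginx {

# The check script itself is shown further below
script "/etc/keepalived/check_nginx.sh"

# Run the check every 2 seconds
interval 2

# Lower the priority by 20 while the check fails (the author reports that failover also worked without this line)
weight -20

}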

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

# Priority of this instance
priority 100

# VRRP advertisement interval in seconds
advert_int 1

# Disable preemption; this option can only be set on BACKUP hosts

#nopreempt

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.8.49

}

track_script {

chk_nginx

}

}

EOF

Configuration for the BACKUP hosts:

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

# vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

# Health-check script definition
vrrp_script chk_nginx {

# The check script itself is shown further below
script "/etc/keepalived/check_nginx.sh"

# Run the check every 2 seconds
interval 2

# Lower the priority by 20 while the check fails
weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.8.49

}

track_script {

chk_nginx

}

}

EOF
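How the priorities in the two configurations interact can be sketched with a little arithmetic. This is a simplified model that assumes a negative track_script weight is added to the base priority only while the check is failing; the function name `effective_priority` is made up for this illustration.

```bash
#!/bin/bash
# Effective VRRP priority for a negative track_script weight:
# the weight is added to the base priority only while the check is failing.
effective_priority() {
    local base="$1" weight="$2" check_ok="$3"
    if [ "${check_ok}" = "yes" ]; then
        echo "${base}"
    else
        echo $(( base + weight ))
    fi
}

master_healthy=$(effective_priority 100 -20 yes)
master_failed=$(effective_priority 100 -20 no)
backup_healthy=$(effective_priority 90 -20 yes)

echo "master healthy: ${master_healthy}"
echo "master with nginx down: ${master_failed}"
echo "backup healthy: ${backup_healthy}"

if [ "${master_failed}" -lt "${backup_healthy}" ]; then
    echo "a healthy backup outranks the master while nginx on the master is down"
fi
```

The weight has to be larger than the priority gap between MASTER and BACKUP (here 20 versus a gap of 10); otherwise a failed check would never move the VIP.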

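Create the check script /etc/keepalived/check_nginx.sh. The heredoc delimiter is quoted so that $(...) and $IsListen are written to the file literally instead of being expanded while the file is created:

cat > /etc/keepalived/check_nginx.sh <<'EOF'
#!/bin/bash
IsListen=$(ss -lnp|grep 7443|grep -v grep |wc -l)
if [ $IsListen -eq 0 ];then
echo "Port 7443 Is Not Used, End"
exit 1
fi
EOF
chmod +x /etc/keepalived/check_nginx.sh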
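The check script contains $(...) and $IsListen, so it matters how the heredoc that writes it is quoted: with an unquoted delimiter the shell expands these while creating the file, with a quoted delimiter they are written as-is. The sketch below shows the difference using a throwaway variable; the file names and the variable VALUE are only for illustration.

```bash
#!/bin/bash
# Compare an unquoted and a quoted heredoc delimiter.
tmpdir="$(mktemp -d)"
VALUE="expanded-at-write-time"

# Unquoted delimiter: $VALUE is expanded while the file is written.
cat > "${tmpdir}/unquoted.sh" <<EOF
echo "$VALUE"
EOF

# Quoted delimiter: the text is copied literally.
cat > "${tmpdir}/quoted.sh" <<'EOF'
echo "$VALUE"
EOF

unquoted_body="$(cat "${tmpdir}/unquoted.sh")"
quoted_body="$(cat "${tmpdir}/quoted.sh")"
echo "unquoted: ${unquoted_body}"
echo "quoted:   ${quoted_body}"

rm -rf "${tmpdir}"
```

The same consideration applies to any configuration file in this guide that contains a dollar sign, such as the log_format line in nginx.conf.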

Start the service:

systemctl enable keepalived

systemctl start keepalived

Check the service

On the MASTER host, check that the VIP has been assigned:

$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:4a:89:b6 brd ff:ff:ff:ff:ff:ff

inet 192.168.8.50/24 brd 192.168.8.255 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.8.49/32 scope global eth0

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:4e:c1:ce:2f brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4.3. Deploy kube-scheduler

Run on every k8s master host.

Create the startup script:

$ cat > /opt/kubernetes/server/bin/kube-scheduler.sh <<EOF

#!/bin/bash

./kube-scheduler \

--leader-elect \

--log-dir /data/logs/kubernetes/kube-scheduler \

--master http://127.0.0.1:8080 \

--v 2

EOF

Create the supervisor configuration:

$ cat > /etc/supervisord.d/kube-scheduler.ini <<EOF
[program:kube-scheduler]
command=/opt/kubernetes/server/bin/kube-scheduler.sh
numprocs=1
directory=/opt/kubernetes/server/bin
autostart=true
autorestart=true
startsecs=30
startretries=3
exitcodes=0,2
stopsignal=QUIT
stopwaitsecs=10
user=root
redirect_stderr=true
stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log
stdout_logfile_maxbytes=64MB
stdout_logfile_backups=4
stdout_capture_maxbytes=1MB
stdout_events_enabled=false
EOF

Start the service:

chmod +x /opt/kubernetes/server/bin/kube-scheduler.sh
mkdir -p /data/logs/kubernetes/kube-scheduler

supervisorctl update

supervisorctl status

4.4. Deploy kube-controller-manager

Run on every k8s master host.

Create the startup script:

$ cat > /opt/kubernetes/server/bin/kube-controller-manager.sh <<EOF

#!/bin/sh

./kube-controller-manager \

--cluster-cidr 172.7.0.0/16 \

--leader-elect=true \

--log-dir /data/logs/kubernetes/kube-controller-manager \

--master http://127.0.0.1:8080 \

--service-account-private-key-file ./cert/ca-key.pem \

--service-cluster-ip-range 10.244.0.0/16 \

--root-ca-file ./cert/ca.pem \

--v 2

EOF

Create the supervisor service configuration:

$ cat > /etc/supervisord.d/kube-controller-manager.ini <<EOF

[program:kube-controller-manager]

command=/opt/kubernetes/server/bin/kube-controller-manager.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=4

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

EOF

Start the service:

chmod +x /opt/kubernetes/server/bin/kube-controller-manager.sh
mkdir -p /data/logs/kubernetes/kube-controller-manager/

supervisorctl update

supervisorctl status

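4.5. Deploy kubelet

4.5.1. Generate the certificate

All certificates are generated on k8s-m1 and then distributed to the hosts that need them.

Create kubelet-csr.json. The IP addresses in the hosts field are those of the nodes that will run kubelet: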

cd /opt/cert

cat > kubelet-csr.json <<EOF

{

"CN": "k8s-kubelet",

"hosts": [

"127.0.0.1",

"192.168.8.48",

"192.168.8.49",

"192.168.8.50",

"192.168.8.51",

"192.168.8.52",

"192.168.8.53",

"192.168.8.54",

"192.168.8.55",

"192.168.8.56"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "API Server"

}

]

}

EOF

Generate the certificate:

$ cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json| cfssljson -bare kubelet

$ ls kubelet*

kubelet.csr kubelet-csr.json kubelet-key.pem kubelet.pem

Distribute the certificate:

for NODE in k8s-m1 k8s-m2 k8s-m3

do

scp kubelet-key.pem kubelet.pem $NODE:/opt/kubernetes/server/bin/cert/

done

4.5.2. Generate the kubelet.kubeconfig file

Run on k8s-m1:

#!/bin/bash

VIP="192.168.8.49"

cd /opt/kubernetes/server/bin/conf

kubectl config set-cluster myk8s \

--certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \

--embed-certs=true \

--server=https://${VIP}:7443 \

--kubeconfig=kubelet.kubeconfig

kubectl config set-credentials k8s-node \

--client-certificate=/opt/kubernetes/server/bin/cert/client.pem \

--client-key=/opt/kubernetes/server/bin/cert/client-key.pem \

--embed-certs=true \

--kubeconfig=kubelet.kubeconfig

kubectl config set-context myk8s-context \

--cluster=myk8s \

--user=k8s-node \

--kubeconfig=kubelet.kubeconfig

kubectl config use-context myk8s-context \

--kubeconfig=kubelet.kubeconfig

This creates kubelet.kubeconfig in the /opt/kubernetes/server/bin/conf directory.

Distribute kubelet.kubeconfig to the other hosts:

$ scp /opt/kubernetes/server/bin/conf/kubelet.kubeconfig k8s-m2:/opt/kubernetes/server/bin/conf/kubelet.kubeconfig

$ scp /opt/kubernetes/server/bin/conf/kubelet.kubeconfig k8s-m3:/opt/kubernetes/server/bin/conf/kubelet.kubeconfig

4.5.3. Bind the role

Create k8s-node.yaml.

It grants the k8s-node user the permissions required to act as a worker node:

$ cat > /opt/kubernetes/server/bin/conf/k8s-node.yaml<<EOF

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: k8s-node

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:node

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: User

name: k8s-node

EOF

Apply it:

$ kubectl apply -f /opt/kubernetes/server/bin/conf/k8s-node.yaml

4.5.4. Create the startup script

/opt/kubernetes/server/bin/kubelet.sh

Remember to change the --hostname-override flag on each host.

cat > /opt/kubernetes/server/bin/kubelet.sh <<EOF

#!/bin/bash

./kubelet \

--cgroup-driver systemd \

--cluster-dns 10.244.0.2 \

--cluster-domain cluster.local \

--runtime-cgroups=/systemd/system.slice \

--kubelet-cgroups=/systemd/system.slice \

--fail-swap-on="false" \

--client-ca-file ./cert/ca.pem \

--tls-cert-file ./cert/kubelet.pem \

--tls-private-key-file ./cert/kubelet-key.pem \

--hostname-override k8s-m1 \

--image-gc-high-threshold 20 \

--image-gc-low-threshold 10 \

--kubeconfig ./conf/kubelet.kubeconfig \

--log-dir /data/logs/kubernetes/kube-kubelet \

--root-dir /data/kubelet

EOF

supervisor configuration file /etc/supervisord.d/kube-kubelet.ini

$ cat > /etc/supervisord.d/kube-kubelet.ini <<EOF

[program:kube-kubelet]

command=/opt/kubernetes/server/bin/kubelet.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=4

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

EOF

4.5.5. Start the service

chmod +x /opt/kubernetes/server/bin/kubelet.sh
mkdir -p /data/logs/kubernetes/kube-kubelet/

supervisorctl update

supervisorctl status

$ kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-m1 Ready - 24h v1.15.1

k8s-m2 Ready - 24h v1.15.1

k8s-m3 Ready - 24h v1.15.1

# Set the role labels

$ kubectl label node k8s-m1 node-role.kubernetes.io/master=

$ kubectl label node k8s-m1 node-role.kubernetes.io/node=

$ kubectl label node k8s-m2 node-role.kubernetes.io/master=

$ kubectl label node k8s-m2 node-role.kubernetes.io/node=

$ kubectl label node k8s-m3 node-role.kubernetes.io/master=

$ kubectl label node k8s-m3 node-role.kubernetes.io/node=

$ kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-m1 Ready master,node 24h v1.15.1

k8s-m2 Ready master,node 24h v1.15.1

k8s-m3 Ready master,node 24h v1.15.1

4.6. Deploy kube-proxy

4.6.1. Issue the certificate

Issue the certificate on k8s-m1, then distribute it to the other nodes.

Create kube-proxy-csr.json:

$ cd /opt/cert

$ cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "kubernetes",

"OU": "ops"

}

]

}

EOF

Issue the certificate:

$ cfssl gencert -ca ca.pem -ca-key ca-key.pem -config ca-config.json -profile client kube-proxy-csr.json|cfssljson -bare kube-proxy-client

$ ls kube-proxy-client*

kube-proxy-client.csr kube-proxy-client-key.pem kube-proxy-client.pem

4.6.2. Distribute the certificate

On every node:

cd /opt/kubernetes/server/bin/cert

scp k8s-m1:/opt/cert/kube-proxy-client.pem .

scp k8s-m1:/opt/cert/kube-proxy-client-key.pem .

4.6.3. Create kube-proxy.kubeconfig

Run on k8s-m1:

#!/bin/bash

VIP=192.168.8.49

cd /opt/kubernetes/server/bin/conf

kubectl config set-cluster myk8s \

--certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \

--embed-certs=true \

--server=https://${VIP}:7443 \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=/opt/kubernetes/server/bin/cert/kube-proxy-client.pem \

--client-key=/opt/kubernetes/server/bin/cert/kube-proxy-client-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context myk8s-context \

--cluster=myk8s \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context myk8s-context --kubeconfig=kube-proxy.kubeconfig

On the other nodes, copy kube-proxy.kubeconfig over:

$ cd /opt/kubernetes/server/bin/conf

scp k8s-m1:/opt/kubernetes/server/bin/conf/kube-proxy.kubeconfig .

4.6.4. Load the ipvs kernel modules

Create the script ipvs.sh on every node, then run it:

#!/bin/bash

ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"

for i in $(ls $ipvs_mods_dir|grep -o "^[^.]*")

do

/sbin/modinfo -F filename $i &>/dev/null

if [ $? -eq 0 ];then

/sbin/modprobe $i

fi

done

Check the loaded kernel modules:

$ lsmod |grep ip_vs

ip_vs_wrr 16384 0

ip_vs_wlc 16384 0

ip_vs_sh 16384 0

ip_vs_sed 16384 0

ip_vs_pe_sip 16384 0

nf_conntrack_sip 28672 1 ip_vs_pe_sip

ip_vs_ovf 16384 0

ip_vs_nq 16384 0

....

4.6.5. Create the startup script

Remember to change the --hostname-override flag on each node.

$ cat > /opt/kubernetes/server/bin/kube-proxy.sh <<EOF

#!/bin/bash

./kube-proxy \

--cluster-cidr 172.7.0.0/16 \

--hostname-override k8s-m1 \

--proxy-mode=ipvs \

--ipvs-scheduler=nq \

--kubeconfig ./conf/kube-proxy.kubeconfig

EOF

$ chmod +x /opt/kubernetes/server/bin/kube-proxy.sh

Create the supervisor configuration:

$ cat > /etc/supervisord.d/kube-proxy.ini <<EOF

[program:kube-proxy]

command=/opt/kubernetes/server/bin/kube-proxy.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-proxy/kube-proxy.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=4

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

EOF

$ mkdir -p /data/logs/kubernetes/kube-proxy/

4.6.6. Start the service

supervisorctl update

supervisorctl status

$ ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.244.0.1:443 nq

-> 192.168.8.50:6443 Masq 1 0 0

-> 192.168.8.51:6443 Masq 1 0 0

-> 192.168.8.52:6443 Masq 1 0 0

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.244.0.1 <none> 443/TCP 2d19h

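4.6.7. Add new nodes with TLS bootstrapping

Once RBAC is enabled, the cluster components communicate over TLS. The server derives the client's user and group from the CN and O fields of the client certificate, so both client and server need valid certificates:

- The kubelet on a node talks to the apiserver on the master nodes; here kubelet is a client and needs a client certificate.

- The kubelet on a node runs as a daemon and exposes its status on port 10250; here kubelet is a server and needs a server certificate.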
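The mapping from certificate subject to user and group can be illustrated with the subject values of the kube-proxy certificate created earlier (CN system:kube-proxy, O kubernetes). The sketch below parses a subject string in plain bash; the string layout and the helper name `subject_field` are assumptions made for this example, not output of any specific tool.

```bash
#!/bin/bash
# Extract one field (for example CN or O) from a comma-separated certificate subject.
subject_field() {
    local subject="$1" key="$2" parts part
    IFS=',' read -r -a parts <<< "${subject}"
    for part in "${parts[@]}"; do
        part="${part# }"                      # drop the space after each comma
        if [ "${part%%=*}" = "${key}" ]; then
            echo "${part#*=}"
            return 0
        fi
    done
    return 1
}

# Subject assembled from the fields in kube-proxy-csr.json (layout assumed).
subject="C=CN, ST=beijing, L=beijing, O=kubernetes, OU=ops, CN=system:kube-proxy"

echo "user  (CN): $(subject_field "${subject}" CN)"
echo "group (O):  $(subject_field "${subject}" O)"
```

This is why the kube-proxy certificate uses CN system:kube-proxy: the API server treats that name as the user when evaluating RBAC rules.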

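- Create the token auth file

The token can be any string carrying 128 bits of randomness; generate it with a secure random number generator.

cd /opt/kubernetes/server/bin/conf
export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

token.csv is written directly into /opt/kubernetes/server/bin/conf, so it does not need to be moved afterwards.

BOOTSTRAP_TOKEN is written both to the token.csv file used by kube-apiserver and to the bootstrap.kubeconfig file used by kubelet. If BOOTSTRAP_TOKEN is regenerated later, you need to:

- update token.csv and distribute it to every master (in this guide, /opt/kubernetes/server/bin/conf); distributing it to the nodes is optional;

- regenerate bootstrap.kubeconfig and distribute it to the /etc/kubernetes/ directory on every node;

- restart the kube-apiserver and kubelet processes;

- approve the kubelet CSR requests again.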
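A generated token can be checked before it is written to token.csv. The following sketch reads 16 random bytes (128 bits), prints them byte by byte as hex, and verifies that the result is exactly 32 lowercase hex characters; it uses od -t x1 instead of the word-sized format so that the output does not depend on byte order, which is a choice made for this example.

```bash
#!/bin/bash
# Generate a 128-bit token as 32 hex characters and validate its format.
BOOTSTRAP_TOKEN="$(head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n')"

if [[ "${BOOTSTRAP_TOKEN}" =~ ^[0-9a-f]{32}$ ]]; then
    echo "token format looks valid (${#BOOTSTRAP_TOKEN} hex characters)"
else
    echo "unexpected token format" >&2
fi
```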

- Allow the kubelet-bootstrap user to create the CSR for its first start

kubectl create clusterrolebinding kubelet-bootstrap \
--clusterrole=system:node-bootstrapper \
--user=kubelet-bootstrap

- Create the kubelet bootstrapping kubeconfig file

export KUBE_APISERVER="https://192.168.8.49:7443"
# Set the cluster parameters
kubectl config set-cluster myk8s \
--certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=bootstrap.kubeconfig
# Set the client authentication parameters
kubectl config set-credentials kubelet-bootstrap \
--token=${BOOTSTRAP_TOKEN} \
--kubeconfig=bootstrap.kubeconfig
# Set the context parameters
kubectl config set-context default \
--cluster=myk8s \
--user=kubelet-bootstrap \
--kubeconfig=bootstrap.kubeconfig
# Select the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

On the master nodes, edit the kube-apiserver.sh startup script to enable bootstrap token authentication and point it at token.csv:

    --enable-bootstrap-token-auth --token-auth-file ./conf/token.csv

Then restart the kube-apiserver service.

-

Copy the kubelet and kube-proxy binaries to the new node:

    scp kubelet kube-proxy k8s-n4:/usr/local/bin

-

Create the kubelet configuration file and the kubelet.service unit. Here both are generated in one step by a kubelet.sh script.

Change the node IP, cluster DNS IP, and node name to match your environment.

    $ cat > kubelet.sh <<'SCRIPT'
    #!/bin/bash

    NODE_ADDRESS="192.168.8.26"
    DNS_SERVER_IP="10.244.0.2"
    NODE_NAME="k8s-n4"

    # Options file read by the systemd unit below
    cat <<EOF >/etc/kubernetes/kubelet
    KUBELET_ARGS="--v=2 \\
    --address=${NODE_ADDRESS} \\
    --cgroup-driver systemd \\
    --runtime-cgroups=/systemd/system.slice \\
    --kubelet-cgroups=/systemd/system.slice \\
    --image-gc-high-threshold 20 \\
    --image-gc-low-threshold 10 \\
    --log-dir /data/logs/kubernetes/kube-kubelet \\
    --hostname-override=${NODE_NAME} \\
    --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \\
    --bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \\
    --cert-dir=/etc/kubernetes/ssl \\
    --cluster-dns=${DNS_SERVER_IP} \\
    --cluster-domain=cluster.local \\
    --fail-swap-on=false \\
    --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
    EOF

    # systemd unit for the kubelet
    cat <<EOF >/usr/lib/systemd/system/kubelet.service
    [Unit]
    Description=Kubernetes Kubelet
    After=docker.service
    Requires=docker.service

    [Service]
    EnvironmentFile=-/etc/kubernetes/kubelet
    ExecStart=/usr/local/bin/kubelet \$KUBELET_ARGS
    Restart=on-failure
    KillMode=process

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl enable kubelet
    systemctl restart kubelet && systemctl status kubelet
    SCRIPT

Run the script:

    chmod +x kubelet.sh
    ./kubelet.sh

The script generates two files, /etc/kubernetes/kubelet and /usr/lib/systemd/system/kubelet.service, which are used to manage the kubelet service.

-

Approve the kubelet's TLS certificate request

On first start the kubelet sends a certificate signing request to kube-apiserver. The Node joins the cluster only after that request has been approved.

Run on a master node:

    $ kubectl get csr
    NAME                                                   AGE   REQUESTOR           CONDITION
    node-csr-SAdjCTFtdpaLiRt1oveG7sPeysBxPlUDYJ3SRScQWFg   3m    kubelet-bootstrap   Pending
    $ kubectl certificate approve node-csr-SAdjCTFtdpaLiRt1oveG7sPeysBxPlUDYJ3SRScQWFg
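When several nodes are added at once, copying each CSR name by hand gets tedious. The sketch below shows one way to pick out the pending requests from the `kubectl get csr` listing. `pending_csrs` is a hypothetical helper, and the sample input is modelled on the output above with one invented, already-approved row so the filter has something to skip.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# names of the requests whose last column (CONDITION) is exactly "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Sample listing; the second data row is invented for illustration.
SAMPLE='NAME AGE REQUESTOR CONDITION
node-csr-SAdjCTFtdpaLiRt1oveG7sPeysBxPlUDYJ3SRScQWFg 3m kubelet-bootstrap Pending
node-csr-example-already-approved 2d kubelet-bootstrap Approved,Issued'

PENDING=$(printf '%s\n' "$SAMPLE" | pending_csrs)
echo "$PENDING"

# On a live cluster the same filter could feed the approve command, e.g.:
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```

Review the list before approving in bulk: any client holding the bootstrap token can submit a CSR.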

5. Deploying the core Kubernetes addons

5.1. Deploying flannel

5.1.1. Preparing the certificates

$ mkdir /opt/flannel-v0.11.0

$ tar zxf flannel-v0.11.0

$ ln -s /opt/flannel-v0.11.0 /opt/flannel

$ mkdir /opt/flannel/cert

$ cd /opt/flannel/cert

$ scp /opt/cert/ca.pem .

$ scp /opt/cert/client.pem .

$ scp /opt/cert/client-key.pem .

$ ls /opt/flannel/cert

ca.pem client-key.pem client.pem

5.1.2. Writing the network configuration to etcd

$ ./etcdctl set /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}'

$ ./etcdctl get /coreos.com/network/config

{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}
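Typing the JSON value by hand inside single quotes is easy to get wrong. As a small sketch, assuming a hypothetical helper named `flannel_config`, the value can be built from its two variable parts and inspected before it is passed to `etcdctl set`:

```shell
#!/bin/sh
# Hypothetical helper: print the flannel network config for a given pod
# network CIDR and backend type.
flannel_config() {
  network=$1
  backend=$2
  printf '{"Network": "%s", "Backend": {"Type": "%s"}}\n' "$network" "$backend"
}

CONFIG=$(flannel_config 172.7.0.0/16 host-gw)
echo "$CONFIG"

# The result could then be stored with the command used above, e.g.:
#   ./etcdctl set /coreos.com/network/config "$CONFIG"
```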

5.1.3. Creating the startup script

-

Create subnet.env. Note that FLANNEL_SUBNET on each node must match the docker subnet address on that node.

    cat > /opt/flannel/subnet.env <<EOF
    FLANNEL_NETWORK=172.7.0.0/16
    FLANNEL_SUBNET=172.7.21.1/24
    FLANNEL_MTU=1500
    FLANNEL_IPMASQ=false
    EOF

-

Create the startup script

Adjust the public IP and the network interface name for each node.

    cat > /opt/flannel/flanneld.sh <<'EOF'
    #!/bin/sh
    ./flanneld \
      --public-ip=192.168.8.50 \
      --etcd-endpoints=https://192.168.8.50:2379,https://192.168.8.51:2379,https://192.168.8.52:2379 \
      --etcd-keyfile=./cert/client-key.pem \
      --etcd-certfile=./cert/client.pem \
      --etcd-cafile=./cert/ca.pem \
      --iface=eth0 \
      --subnet-file=./subnet.env \
      --healthz-port=2401
    EOF
    # supervisor runs the script directly, so it must be executable
    chmod +x /opt/flannel/flanneld.sh

-

Create the supervisor configuration

    cat > /etc/supervisord.d/flanneld.ini <<EOF
    [program:flanneld]
    command=/opt/flannel/flanneld.sh
    numprocs=1
    directory=/opt/flannel
    autostart=true
    autorestart=true
    startsecs=30
    startretries=3
    exitcodes=0,2
    stopsignal=QUIT
    stopwaitsecs=10
    user=root
    redirect_stderr=true
    stdout_logfile=/data/logs/flanneld/flanneld.stdout.log
    stdout_logfile_maxbytes=64MB
    stdout_logfile_backups=4
    stdout_capture_maxbytes=1MB
    stdout_events_enabled=false
    EOF
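Since subnet.env differs on every node, generating it is less error-prone than editing it by hand. The sketch below assumes a convention that is visible elsewhere in this guide but never stated as a rule: the third octet of a node's pod subnet equals the last octet of its host IP (192.168.8.50 uses 172.7.50.1/24). Both helper functions are hypothetical, and the result is printed rather than written to /opt/flannel/subnet.env.

```shell
#!/bin/sh
# Hypothetical helper: derive FLANNEL_SUBNET from a node IP, ASSUMING the
# pod subnet's third octet equals the node IP's last octet.
flannel_subnet() {
  last_octet=${1##*.}
  echo "172.7.${last_octet}.1/24"
}

# Print the subnet.env content for one node instead of writing the real file.
render_subnet_env() {
  cat <<EOF
FLANNEL_NETWORK=172.7.0.0/16
FLANNEL_SUBNET=$(flannel_subnet "$1")
FLANNEL_MTU=1500
FLANNEL_IPMASQ=false
EOF
}

render_subnet_env 192.168.8.50
```

If your docker bridge addresses follow a different scheme, replace `flannel_subnet` accordingly; the value has to match docker0 on that node.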

5.1.4. Starting the service

mkdir -p /data/logs/flanneld

supervisorctl update

supervisorctl status

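5.1.5. Changing the flannel backend mode (optional)

So far flannel has been installed in host-gw mode. host-gw works by modifying the routing table on each host, so it only works when all cluster nodes are on the same local network. For more complex networks you can use VXLAN mode instead. With Directrouting set to true, flannel decides from the network environment whether a destination container can be reached through plain node routes.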

-

Check the current backend mode:

    $ /opt/etcd/bin/etcdctl get /coreos.com/network/config
    {"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}

-

Switch from host-gw mode to vxlan:

    $ /opt/etcd/bin/etcdctl update /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "VXLAN","Directrouting":true}}'
    $ /opt/etcd/bin/etcdctl get /coreos.com/network/config
    {"Network": "172.7.0.0/16", "Backend": {"Type": "VXLAN","Directrouting":true}}

-

Restart the flannel service (the supervisor program is named flanneld):

    supervisorctl restart flanneld

-

List the network interfaces; the virtual interface flannel.1 should now be present:

    $ ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 52:54:00:4a:89:b6 brd ff:ff:ff:ff:ff:ff
        inet 192.168.8.50/24 brd 192.168.8.255 scope global eth0
           valid_lft forever preferred_lft forever
    3: dummy0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default qlen 1000
        link/ether 56:0c:3a:fa:c4:07 brd ff:ff:ff:ff:ff:ff
    4: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default
        link/ether 96:3d:d0:d2:f2:4f brd ff:ff:ff:ff:ff:ff
        inet 10.244.0.1/32 brd 10.244.0.1 scope global kube-ipvs0
           valid_lft forever preferred_lft forever
    5: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:2a:92:c2:db brd ff:ff:ff:ff:ff:ff
        inet 172.7.50.1/24 brd 172.7.50.255 scope global docker0
           valid_lft forever preferred_lft forever
    7: vethc0ef8ad@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
        link/ether 0a:bd:cd:da:b0:13 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    8: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
        link/ether 5e:24:47:15:9b:af brd ff:ff:ff:ff:ff:ff
        inet 172.7.50.0/32 scope global flannel.1
           valid_lft forever preferred_lft forever
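Whether host-gw is usable depends on all nodes sharing one network. As a rough sketch of that decision, the helpers below (all hypothetical names) compare the network address of every node IP under a given prefix length and suggest a backend. Sharing an IP subnet is used here only as a stand-in for "same local network", which is an assumption; it does not prove layer-2 adjacency.

```shell
#!/bin/sh
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the network address (as an integer) of IP $1 under prefix length $2.
network_of() {
  addr=$(ip_to_int "$1")
  mask=$(( (0xFFFFFFFF << (32 - $2)) & 0xFFFFFFFF ))
  echo $(( $addr & $mask ))
}

# Hypothetical helper: print "host-gw" if every node IP falls in the same
# subnet as the first one, otherwise "vxlan".
suggest_backend() {
  prefix=$1
  shift
  first=$(network_of "$1" "$prefix")
  for node in "$@"; do
    if [ "$(network_of "$node" "$prefix")" -ne "$first" ]; then
      echo vxlan
      return
    fi
  done
  echo host-gw
}

# Node IPs from the environment table of this guide, all in 192.168.8.0/24.
suggest_backend 24 192.168.8.50 192.168.8.51 192.168.8.52 192.168.8.60 192.168.8.61
```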

5.2. Tuning the flannel SNAT rules

5.2.1. The problem

Exec into one pod and send a curl request to another pod:

$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-8jv8t 1/1 Running 0 21h 172.7.51.2 k8s-m2 <none> <none>

nginx-bkcct 1/1 Running 1 21h 172.7.50.2 k8s-m1 <none> <none>

nginx-jv8bz 1/1 Running 0 20h 172.7.52.2 k8s-m3 <none> <none>

$ kubectl exec -it nginx-bkcct -- /bin/sh

/ # curl -I 172.7.51.2

HTTP/1.1 200 OK

Server: nginx/1.12.2

Date: Thu, 26 Mar 2020 05:55:10 GMT

Content-Type: text/html

Content-Length: 65

Last-Modified: Fri, 02 Mar 2018 03:39:12 GMT

Connection: keep-alive

ETag: "5a98c760-41"

Accept-Ranges: bytes

The log of the target pod shows the host's IP address as the source, not the IP address assigned to the calling pod:

$ kubectl logs -f nginx-8jv8t

192.168.8.50 - - "HEAD / HTTP/1.1" 200 0 "-" "curl/7.29.0" "-"

5.2.2. The fix

Inspect the SNAT rules in the host's iptables:

$ iptables-save|grep -i postrouting

:POSTROUTING ACCEPT [2:120]

:KUBE-POSTROUTING - [0:0]

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -s 172.7.51.0/24 ! -o docker0 -j MASQUERADE

-A POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE

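Replace the rule for this node's pod subnet so that traffic whose destination is inside the pod network 172.7.0.0/16 is no longer masqueraded: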

$ iptables -t nat -D POSTROUTING -s 172.7.51.0/24 ! -o docker0 -j MASQUERADE

$ iptables -t nat -I POSTROUTING -s 172.7.51.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE

Check again:

$ kubectl exec -it nginx-8jv8t -- /bin/sh

/ # curl 172.7.50.2

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

$ kubectl logs -f nginx-bkcct

172.7.51.2 - - "GET / HTTP/1.1" 200 65 "-" "curl/7.67.0" "-"

The source address is now the pod's IP address.
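The rule has to be replaced on every node, each time with that node's own pod subnet. A minimal sketch, assuming a hypothetical helper `snat_fix_commands`, prints the two commands for a given node so they can be reviewed before being run as root:

```shell
#!/bin/sh
# Hypothetical helper: print (not run) the iptables commands that swap the
# blanket MASQUERADE rule for one that skips pod-to-pod traffic.
snat_fix_commands() {
  node_subnet=$1   # this node's docker0 subnet, e.g. 172.7.51.0/24
  pod_network=$2   # the whole flannel network, e.g. 172.7.0.0/16
  echo "iptables -t nat -D POSTROUTING -s $node_subnet ! -o docker0 -j MASQUERADE"
  echo "iptables -t nat -I POSTROUTING -s $node_subnet ! -d $pod_network ! -o docker0 -j MASQUERADE"
}

# Commands for the node whose docker0 subnet is 172.7.50.0/24.
snat_fix_commands 172.7.50.0/24 172.7.0.0/16
```

Rules added with the iptables command are not persistent by themselves; how to save them depends on the distribution and is outside the scope of this sketch.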

General form of the kubectl exec command:

kubectl exec -it <podName> -c <containerName> -n <namespace> -- <shell command>

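5.3. Deploying CoreDNS

GitHub repository: https://github.com/coredns/coredns

Official CoreDNS deployment scripts for Kubernetes: https://github.com/coredns/deployment/tree/master/kubernetes

In that repository, deploy.sh is the script that generates the manifests for running CoreDNS, and coredns.yaml.sed is the template. The template has four parts: RBAC, ConfigMap, Deployment, and Service.

deploy.sh usage: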

$ ./deploy.sh -h

usage: ./deploy.sh [ -r REVERSE-CIDR ] [ -i DNS-IP ] [ -d CLUSTER-DOMAIN ] [ -t YAML-TEMPLATE ]

-r : Define a reverse zone for the given CIDR. You may specify this option more

than once to add multiple reverse zones. If no reverse CIDRs are defined,

then the default is to handle all reverse zones (i.e. in-addr.arpa and ip6.arpa)

-i : Specify the cluster DNS IP address. If not specified, the IP address of

the existing "kube-dns" service is used, if present.

-s : Skips the translation of kube-dns configmap to the corresponding CoreDNS Corefile configuration.

The script uses kubectl get service --namespace kube-system kube-dns -o jsonpath="{.spec.clusterIP}" to look up the cluster DNS IP.

5.3.1. Deployment

Split the official YAML into four files, one per resource type.

-

rbac.yaml:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: coredns
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:coredns
    rules:
    - apiGroups:
      - ""
      resources:
      - endpoints
      - services
      - pods
      - namespaces
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:coredns
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:coredns
    subjects:
    - kind: ServiceAccount
      name: coredns
      namespace: kube-system

-

configmap.yaml

The forward plugin can list several upstream name servers, or it can point at /etc/resolv.conf to defer lookups to the name servers defined there.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns
      namespace: kube-system
    data:
      Corefile: |
        .:53 {
            errors
            health {
              lameduck 5s
            }
            ready
            kubernetes cluster.local 10.244.0.0/16 in-addr.arpa ip6.arpa {
              fallthrough in-addr.arpa ip6.arpa
            }
            prometheus :9153
            forward . 192.168.8.22
            cache 30
            loop
            reload
            loadbalance
        }

-

service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      annotations:
        prometheus.io/port: "9153"
        prometheus.io/scrape: "true"
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        kubernetes.io/name: "CoreDNS"
    spec:
      selector:
        k8s-app: kube-dns
      clusterIP: 10.244.0.2
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP
      - name: metrics
        port: 9153
        protocol: TCP

-

deployment.yaml

The Deployment configures a livenessProbe (port 8080) and a readinessProbe (port 8181) to check that CoreDNS has started and is ready.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/name: "CoreDNS"
    spec:
      # replicas: not specified here:
      # 1. Default is 1.
      # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
      selector:
        matchLabels:
          k8s-app: kube-dns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: coredns
          tolerations:
            - key: "CriticalAddonsOnly"
              operator: "Exists"
          nodeSelector:
            kubernetes.io/os: linux
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: k8s-app
                    operator: In
                    values: ["kube-dns"]
                topologyKey: kubernetes.io/hostname
          containers:
          - name: coredns
            image: coredns/coredns:1.6.7
            imagePullPolicy: IfNotPresent
            resources:
              limits:
                memory: 170Mi
              requests:
                cpu: 100m
                memory: 70Mi
            args: [ "-conf", "/etc/coredns/Corefile" ]
            volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
              readOnly: true
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                add:
                - NET_BIND_SERVICE
                drop:
                - all
              readOnlyRootFilesystem: true
            livenessProbe:
              httpGet:
                path: /health
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: /ready
                port: 8181
                scheme: HTTP
          dnsPolicy: Default
          volumes:
            - name: config-volume
              configMap:
                name: coredns
                items:
                - key: Corefile
                  path: Corefile

-

Apply the four manifests in order:

    $ kubectl apply -f rbac.yaml
    $ kubectl apply -f configmap.yaml
    $ kubectl apply -f service.yaml
    $ kubectl apply -f deployment.yaml

-

Check that everything started successfully:

    $ kubectl get all -n kube-system
    NAME                          READY   STATUS    RESTARTS   AGE
    pod/coredns-54d5b4c8c-drgl8   1/1     Running   0          30m

    NAME               TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)                  AGE
    service/kube-dns   ClusterIP   10.244.0.2   <none>        53/UDP,53/TCP,9153/TCP   79m

    NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/coredns   1/1     1            1           30m

    NAME                                DESIRED   CURRENT   READY   AGE
    replicaset.apps/coredns-54d5b4c8c   1         1         1       30m

5.3.2. Testing

-

Resolve an external domain name:

    $ dig -t A www.baidu.com @10.244.0.2 +short
    www.a.shifen.com.
    220.181.38.150
    220.181.38.149

-

Resolve a cluster Service:

    # Create a deployment
    $ kubectl create deploy nginx-dp --image=nginx:1.7.9 -n kube-public
    # Create a svc
    $ kubectl -n kube-public expose deploy nginx-dp --port=80
    # List the svc
    $ kubectl -n kube-public get svc
    NAME       TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
    nginx-dp   ClusterIP   10.244.92.34   <none>        80/TCP    11m
    # Test
    $ dig -t A @10.244.0.2 nginx-dp.kube-public.svc.cluster.local. +short
    10.244.92.34

-

Resolve across namespaces from inside a pod

Enter a container in the default namespace and ping the svc in the kube-public namespace:

    $ kubectl get pod -n default
    NAME          READY   STATUS    RESTARTS   AGE
    nginx-8bqf2   1/1     Running   0          3h33m
    nginx-8jv8t   1/1     Running   0          46h
    nginx-8xn9p   1/1     Running   1          4h11m
    nginx-ccfmb   1/1     Running   0          21h
    nginx-wdlzc   1/1     Running   0          4h11m
    $ kubectl exec -it nginx-8bqf2 -- /bin/sh
    / # ping nginx-dp.kube-public
    PING nginx-dp.kube-public (10.244.92.34): 56 data bytes
    64 bytes from 10.244.92.34: seq=0 ttl=64 time=0.045 ms
    64 bytes from 10.244.92.34: seq=1 ttl=64 time=0.170 ms
    ...

Both the external lookup and the internal svc lookups work, which shows that CoreDNS was deployed successfully.
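The short name nginx-dp.kube-public resolves from the default namespace because the pod's resolver appends the cluster search domains before trying the name as-is. The sketch below lists the candidate names in the order a pod with the usual ClusterFirst DNS settings would try them; `search_candidates` is a hypothetical helper, and any extra search domains inherited from the host are left out.

```shell
#!/bin/sh
# Hypothetical helper: print the names tried for a short name, given the
# pod's namespace and the cluster domain.
search_candidates() {
  name=$1
  pod_namespace=$2
  domain=$3
  for suffix in "$pod_namespace.svc.$domain" "svc.$domain" "$domain"; do
    echo "$name.$suffix"
  done
  # Finally the name is tried exactly as given.
  echo "$name"
}

search_candidates nginx-dp.kube-public default cluster.local
```

The second candidate, nginx-dp.kube-public.svc.cluster.local, is the one that matches the Service created in the test above.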

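5.4. Exposing services with Traefik

GitHub repository: https://github.com/containous/traefik

An Ingress is the entry point for reaching the cluster from outside Kubernetes; it forwards users' URL requests to different Services. It plays the role of a load-balancing reverse proxy such as nginx or Apache, and it also carries the rule definitions, that is, the URL routing information.

5.4.1. Deploying Traefik

Create the following four manifests.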

-

rbac.yaml

    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: traefik-ingress-controller
    rules:
      - apiGroups:
          - ""
        resources:
          - services
          - endpoints
          - secrets
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - extensions
        resources:
          - ingresses
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - extensions
        resources:
          - ingresses/status
        verbs:
          - update
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: traefik-ingress-controller
      namespace: kube-system
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: traefik-ingress-controller
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: traefik-ingress-controller
    subjects:
    - kind: ServiceAccount
      name: traefik-ingress-controller
      namespace: kube-system

-

service.yaml

    kind: Service
    apiVersion: v1
    metadata:
      name: traefik-ingress-service
      namespace: kube-system
    spec:
      selector:
        k8s-app: traefik-ingress-lb
      ports:
        - protocol: TCP
          port: 80
          name: controller
        - protocol: TCP
          port: 8080
          name: admin-web

-

ingress.yaml

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: traefik-web-ui
      namespace: kube-system
      annotations:
        kubernetes.io/ingress.class: traefik
    spec:
      rules:
      - host: traefik.ts.com
        http:
          paths:
          - path: /
            backend:
              serviceName: traefik-ingress-service
              servicePort: 8080

-

ds.yaml

Change https://192.168.8.49:7443 to the VIP address and port of your own API server.

    ---
    kind: DaemonSet
    apiVersion: extensions/v1beta1
    metadata:
      name: traefik-ingress-controller
      namespace: kube-system
      labels:
        k8s-app: traefik-ingress-lb
    spec:
      selector:
        matchLabels:
          k8s-app: traefik-ingress-lb
          name: traefik-ingress-lb
      template:
        metadata:
          labels:
            k8s-app: traefik-ingress-lb
            name: traefik-ingress-lb
        spec:
          serviceAccountName: traefik-ingress-controller
          terminationGracePeriodSeconds: 60
          containers:
          - image: traefik:v1.7.2
            name: traefik-ingress-lb
            ports:
            - name: http
              containerPort: 80
              hostPort: 81
            - name: admin
              containerPort: 8080
              hostPort: 8081
            securityContext:
              capabilities:
                drop:
                - ALL
                add:
                - NET_BIND_SERVICE
            args:
            - --api
            - --kubernetes
            - --logLevel=INFO
            - --insecureskipverify=true
            - --kubernetes.endpoint=https://192.168.8.49:7443
            - --accesslog
            - --accesslog.filepath=/var/log/traefik_access.log
            - --traefiklog
            - --traefiklog.filepath=/var/log/traefik.log
            - --metrics.prometheus

Apply the manifests:

kubectl apply -f rbac.yaml

kubectl apply -f ingress.yaml

kubectl apply -f service.yaml

kubectl apply -f ds.yaml

5.4.2. Configuring the nginx proxy

Add a reverse proxy to nginx:

$ cat traefik.ts.com.conf

upstream default_backend_traefik {

server 192.168.8.50:81 max_fails=3 fail_timeout=10s;

server 192.168.8.51:81 max_fails=3 fail_timeout=10s;

server 192.168.8.52:81 max_fails=3 fail_timeout=10s;

server 192.168.8.54:81 max_fails=3 fail_timeout=10s;

server 192.168.8.61:81 max_fails=3 fail_timeout=10s;

}

server {

listen 80;

server_name *.ts.com;

location / {

proxy_pass http://default_backend_traefik;

proxy_set_header Host $http_host;

proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;

}

access_log logs/access.ts.log main;

error_log logs/error.ts.log;

}

Add a record to your hosts file or local DNS:

192.168.8.22 traefik.ts.com

Here 192.168.8.22 is the address of the nginx server.

Open http://traefik.ts.com in a browser to view the Traefik web UI.
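The upstream block has to list every node that runs the Traefik DaemonSet, so it changes whenever a node is added or removed. As a small sketch, assuming a hypothetical helper `render_upstream`, the block can be generated from a node list and the Traefik host port (81 in ds.yaml) instead of being edited by hand:

```shell
#!/bin/sh
# Hypothetical helper: print an nginx upstream block for the given host port
# and node IPs, using the same per-server options as the config above.
render_upstream() {
  port=$1
  shift
  echo "upstream default_backend_traefik {"
  for node in "$@"; do
    echo "    server $node:$port max_fails=3 fail_timeout=10s;"
  done
  echo "}"
}

# Example with the three master nodes of this guide.
render_upstream 81 192.168.8.50 192.168.8.51 192.168.8.52
```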

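5.5. Deploying the dashboard

5.6. Deploying metrics server

Through metrics server, Kubernetes collects the CPU and memory utilization of pods and uses it for autoscaling, adjusting the number of Pods automatically (DaemonSets are currently not supported).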
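To make the autoscaling use concrete: the Horizontal Pod Autoscaler scales by the ratio of the observed metric to its target, rounded up. The sketch below computes that ratio in integer arithmetic. `desired_replicas` is a hypothetical helper, and it deliberately ignores the tolerance band and the min/max replica limits that the real controller also applies.

```shell
#!/bin/sh
# desired = ceil(current_replicas * current_utilization / target_utilization)
# Utilization values are whole percentages.
desired_replicas() {
  current=$1
  utilization=$2
  target=$3
  # Integer ceiling division: (a + b - 1) / b
  echo $(( ($current * $utilization + $target - 1) / $target ))
}

# 3 replicas at 90% CPU with a 60% target.
desired_replicas 3 90 60
```

With the values in the example, 3 * 90 / 60 = 4.5, which rounds up to 5 replicas.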

5.6.1. Generating the certificates

$ cd /opt/cert/

$ cat > metrics-proxy-csr.json <<EOF

{

"CN": "system:metrics-server",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "system"

}

]

}

EOF

$ cfssl gencert -ca ca.pem -ca-key ca-key.pem -config ca-config.json -profile peer metrics-proxy-csr.json|cfssljson -bare metrics-proxy

$ ls metrics-proxy*

metrics-proxy.csr metrics-proxy-csr.json metrics-proxy-key.pem metrics-proxy.pem

Sync the certificates to the certificate directory /opt/kubernetes/server/bin/cert/ on every master node, then edit the apiserver startup script:

$ vi kube-apiserver.sh

...

...

# Add the following

--proxy-client-cert-file ./cert/metrics-proxy.pem \

--proxy-client-key-file ./cert/metrics-proxy-key.pem \

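Restart the kube-apiserver service.

Note

If these certificates are not specified, the metrics pod may report errors after startup such as unable to fetch metrics from kubelet request failed - "401 unauthorized", resp.

5.6.2. Installation

Clone the project: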

$ git clone https://github.com/kubernetes-sigs/metrics-server.git

$ cd metrics-server

$ git checkout release-0.3

$ cd deploy/1.8+

$ ls

aggregated-metrics-reader.yaml auth-reader.yaml metrics-server-deployment.yaml resource-reader.yaml

auth-delegator.yaml metrics-apiservice.yaml metrics-server-service.yaml

In metrics-server-deployment.yaml, change the image address and add the startup arguments:

    containers:
    - name: metrics-server
      image: mirrorgooglecontainers/metrics-server-amd64:v0.3.6
      imagePullPolicy: IfNotPresent
      args:
      - --cert-dir=/tmp
      - --secure-port=4443
      - --kubelet-preferred-address-types=InternalDNS,InternalIP,ExternalDNS,ExternalIP,Hostname
      - --kubelet-insecure-tls

Apply the manifests:

$ kubectl apply -f ./

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

serviceaccount/metrics-server created

deployment.apps/metrics-server created

service/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

$ kubectl -n kube-system get pod

NAME READY STATUS RESTARTS AGE

coredns-54d5b4c8c-2nm67 1/1 Running 0 27h

kubernetes-dashboard-85d4f56ddc-45pn5 1/1 Running 0 120m

metrics-server-7fc555984-ddl9f 1/1 Running 0 18s

Check the startup log:

$ kubectl -n kube-system logs -f metrics-server-7fc555984-ddl9f

I0415 08:42:18.690008 1 serving.go:312] Generated self-signed cert (/tmp/apiserver.crt, /tmp/apiserver.key)

I0415 08:42:19.384377 1 secure_serving.go:116] Serving securely on [::]:4443

The pod has started successfully. Use the top command to check the resource utilization of pods and nodes:

$ kubectl top node

Error from server (Forbidden): nodes.metrics.k8s.io is forbidden: User "system:metrics-server" cannot list resource "nodes" in API group "metrics.k8s.io" at the cluster scope

The error above means that the system:metrics-server user lacks the required permission.

As a test, bind system:metrics-server to the cluster-admin role:

$ kubectl create clusterrolebinding cluster-kube-proxy --clusterrole=cluster-admin --user=system:metrics-server

clusterrolebinding.rbac.authorization.k8s.io/cluster-kube-proxy created

$ kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-m1 270m 13% 1331Mi 70%

k8s-m2 216m 10% 1298Mi 68%

k8s-m3 197m 9% 1394Mi 73%

k8s-n1 165m 8% 1260Mi 66%

k8s-n2 59m 2% 485Mi 54%

k8s-n3 60m 1% 990Mi 52%

k8s-n4 56m 1% 643Mi 37%

Because the system:metrics-server user is now bound to the cluster-admin role, the permission error no longer appears, but this setup is not recommended.

Delete the role binding:

$ kubectl delete clusterrolebinding cluster-kube-proxy

clusterrolebinding.rbac.authorization.k8s.io "cluster-kube-proxy" deleted

Edit the role permissions in resource-reader.yaml:

$ vim resource-reader.yaml

    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: system:metrics-server
    rules:
    - apiGroups:
      - metrics.k8s.io
      - ""
      resources:
      - pods
      - nodes
      - nodes/stats
      - namespaces
      - configmaps
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: system:metrics-server
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:metrics-server
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    - kind: User
      apiGroup: rbac.authorization.k8s.io
      name: system:metrics-server

# Apply the configuration

$ kubectl apply -f resource-reader.yaml

clusterrole.rbac.authorization.k8s.io/system:metrics-server unchanged

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server configured

# Verify

$ kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-m1 281m 14% 1259Mi 66%

k8s-m2 215m 10% 1241Mi 65%

k8s-m3 201m 10% 1281Mi 67%

k8s-n1 162m 8% 1264Mi 66%

k8s-n2 62m 3% 485Mi 54%

k8s-n3 54m 1% 988Mi 52%

k8s-n4 63m 1% 643Mi 37%
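Once `kubectl top node` works, its output is easy to post-process. As an illustrative sketch, the hypothetical helper `nodes_over_memory` below reads that output on stdin and prints the nodes whose MEMORY% is above a threshold; the sample input is the listing shown above.

```shell
#!/bin/sh
# Hypothetical helper: print the names of nodes whose MEMORY% column
# (the fifth field) is strictly greater than the given threshold.
nodes_over_memory() {
  awk -v limit="$1" 'NR > 1 {
    pct = $5
    sub(/%/, "", pct)
    if (pct + 0 > limit) print $1
  }'
}

# Sample input copied from the output above.
SAMPLE='NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
k8s-m1 281m 14% 1259Mi 66%
k8s-m2 215m 10% 1241Mi 65%
k8s-m3 201m 10% 1281Mi 67%
k8s-n1 162m 8% 1264Mi 66%
k8s-n2 62m 3% 485Mi 54%
k8s-n3 54m 1% 988Mi 52%
k8s-n4 63m 1% 643Mi 37%'

BUSY=$(printf '%s\n' "$SAMPLE" | nodes_over_memory 65)
echo "$BUSY"

# On a live cluster: kubectl top node | nodes_over_memory 65
```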